We’ve been hearing a lot about “DNS” in the news lately, especially during the October outage, so let’s break down what it is, what it does, and why the entire web can melt into a puddle the moment it misbehaves.

(And just a reminder: if the news throws a tech term at you that feels like a boss fight, ping us on any of our socials. Translating jargon is my love language.)

We love a good metaphor here at CybersecuriTea, so, to make this all easier, we’re going to talk about the internet the way it behaves: like a very busy, very chaotic restaurant (think The Bear).

DNS: the internet’s frantic little expediter

DNS stands for the Domain Name System. Its entire job is to translate human-friendly names (like google.com) into machine-friendly IP addresses (strings of numbers like 8.8.4.4). Computers don’t understand “google dot com.” They understand numbers. DNS is the interpreter (the expediter) in the middle.

Every device online has an IP address, the same way every table in a restaurant has a number so the server knows where the food should go.
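For the curious, here's what asking for that translation looks like from a program's point of view. This is a minimal Python sketch and assumes Python 3.9 or newer. `resolve_ipv4` is a name we made up. `socket.getaddrinfo` is the standard library's real way of asking your operating system's resolver. The numbers you get back for a real site can vary by location and time, and that's normal.

```python
import socket


def resolve_ipv4(hostname: str) -> list[str]:
    """Return the IPv4 addresses the operating system's resolver finds for a name.

    Under the hood, the OS typically checks local files (like /etc/hosts)
    and asks DNS servers; we just get back the answer.
    """
    results = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each result is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (ip_address, port) pair.
    return sorted({info[4][0] for info in results})


if __name__ == "__main__":
    print(resolve_ipv4("localhost"))
    # With an internet connection, try a real name too:
    # print(resolve_ipv4("google.com"))
```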

If you want a quick primer, whatismyip.org explains it well, and the best comic-style explainer lives at How DNS Works.

How DNS keeps the whole restaurant running

Of course, a lot more happens between “I clicked the link” and “the page finally loaded.” Behind the scenes is the equivalent of a full kitchen rush: tickets coming in, orders being prepped, staff juggling timing and sequencing.

This Cloudflare explainer walks through the whole process beautifully. Most people never have to think about any of it — until the day the kitchen printer jams and suddenly nothing is coming out.
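To make the "kitchen rush" a bit more concrete, here's a toy Python model of the chain of questions the Cloudflare explainer walks through. A resolver asks a root server and gets pointed to the .com server. The .com server points it to the domain's own (authoritative) server, and only that server hands back an address. This is a sketch under heavy simplifying assumptions. The servers are a hard-coded dictionary with invented names. Real resolvers also cache answers, retry, and talk over the network using the DNS protocol, and none of that is modeled here.

```python
# A toy model of the lookup chain. Every server name and address here is
# invented; 203.0.113.0/24 is an IP range reserved for documentation.
ZONES = {
    # Root server: knows which server handles each top-level domain.
    "root": {"com": ("referral", "com-tld-server")},
    # .com server: knows which server is authoritative for each .com domain.
    "com-tld-server": {"example.com": ("referral", "example-authoritative")},
    # Authoritative server: holds the actual address record.
    "example-authoritative": {"www.example.com": ("answer", "203.0.113.10")},
}


def resolve(name: str, max_steps: int = 10) -> tuple[str, list[str]]:
    """Follow referrals from the root until some server gives a real answer.

    Returns the IP address plus the list of servers we asked along the way.
    """
    server = "root"
    asked = []
    for _ in range(max_steps):
        asked.append(server)
        zone = ZONES[server]
        # Find the most specific entry that covers this name.
        matches = [key for key in zone if name == key or name.endswith("." + key)]
        if not matches:
            raise LookupError(f"{server} has nothing for {name}")
        kind, value = zone[max(matches, key=len)]
        if kind == "answer":
            return value, asked
        server = value  # a referral: go ask the next server down the chain
    raise LookupError(f"gave up on {name} after {max_steps} referrals")


if __name__ == "__main__":
    print(resolve("www.example.com"))
```

Calling `resolve("www.example.com")` returns `('203.0.113.10', ['root', 'com-tld-server', 'example-authoritative'])`. That's three questions and one answer. If you ask about a name none of these servers know, like `www.example.org`, you get a `LookupError` instead.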

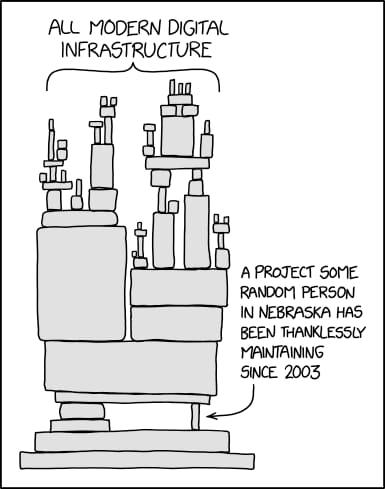

Tech folks have been aware of this fragility for a long time. Randall Munroe even drew an xkcd comic about it:

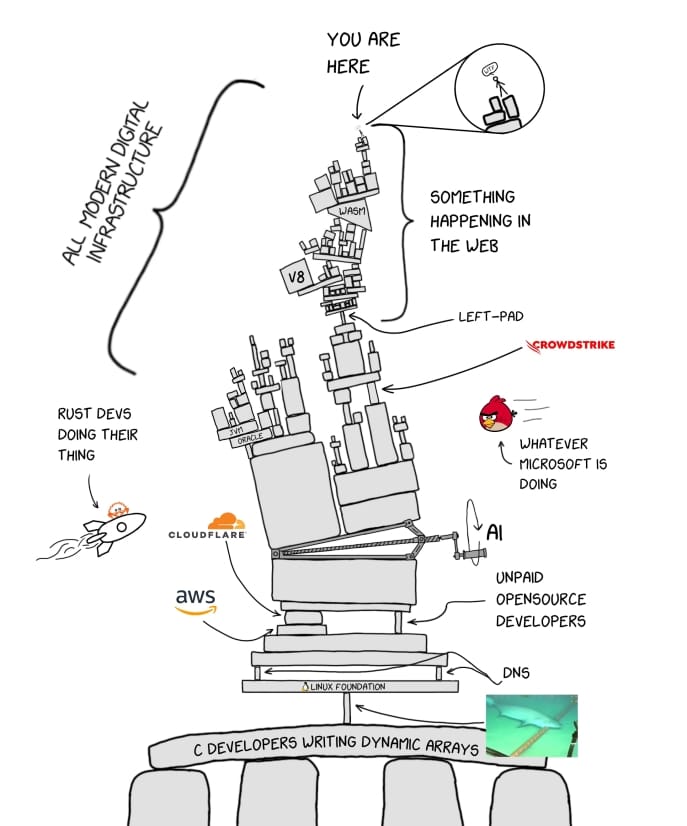

…and people keep remixing it to reflect our current “everything is on fire but it’s fine 😅🔥” era:

How AWS accidentally spilled coffee in the internet’s lap

Amazon summarized the October 19–20 mess here.

In plain English: the system in charge of generating DNS entries (the part that says “order #12 goes to table 5”) stopped working. Completely.

Suddenly:

the restaurant filled up

orders kept coming

but no table numbers were being printed,

so the kitchen had no idea where anything belonged

At that point, the restaurant (our internet) just froze.

Amazon’s write-up is also a museum-grade example of needlessly intimidating and opaque technical prose (a rant for another day). But here’s the truly gnarly part: because so much of the internet runs on AWS, people tried going to other “restaurants”… only to discover they were all relying on the same broken ticket machine.

Everything jammed.
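Here's a toy Python sketch of why one bad entry can take so many "restaurants" down at once. Everything in it is hypothetical. The service names, the record name, and the idea that all three services share exactly one record are stand-ins for "lots of services quietly depending on the same piece." The servers behind the addresses never break in this example. Only the record pointing to them goes empty.

```python
# Hypothetical setup: one shared DNS record that many services depend on.
# The names are invented; 198.51.100.0/24 is reserved for documentation.
DNS_RECORDS = {"orders.example.internal": ["198.51.100.7", "198.51.100.8"]}

SERVICES = {
    "shopping-site": "orders.example.internal",
    "streaming-app": "orders.example.internal",
    "smart-doorbell": "orders.example.internal",
}


def resolve(name: str, records: dict[str, list[str]]) -> list[str]:
    addresses = records.get(name, [])
    if not addresses:
        # An empty record looks exactly like "this place doesn't exist."
        raise LookupError(f"no addresses for {name}")
    return addresses


def health_check(records: dict[str, list[str]]) -> dict[str, str]:
    """Report whether each service can find the thing it depends on."""
    status = {}
    for service, dependency in SERVICES.items():
        try:
            resolve(dependency, records)
            status[service] = "up"
        except LookupError:
            status[service] = "down"
    return status


if __name__ == "__main__":
    print(health_check(DNS_RECORDS))
    # Wipe just the one record; the servers at those addresses are untouched.
    broken = {**DNS_RECORDS, "orders.example.internal": []}
    print(health_check(broken))
```

With the record intact, every service reports "up." Empty that single record and every service reports "down," even though the kitchen itself is fine.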

The ticket machine jam that nobody noticed

Many headlines called this a “DNS issue.” That’s not wrong, but DNS was the symptom, not the root cause.

AWS wrote:

“The root cause… was a latent race condition in the DynamoDB DNS management system…”

A race condition is what happens when two parts of a system act at the same time and the result depends on which one gets there first, in an order the engineers didn’t plan for. Imagine two servers hitting “send to kitchen” at the same moment, and the ticket machine having a small existential crisis about which one was first and printing nonsense.
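A race condition is easier to see when you can control the timing by hand. This toy Python sketch shows the classic "check, then act" mistake, not AWS's actual system. It has two servers, A and B. Each one reads the next ticket number and then writes the bumped number back. Splitting each server's work into steps with `yield` lets us pick the exact order things happen in. The names `send_to_kitchen` and `run` are made up for the example.

```python
def send_to_kitchen(server, machine, printed):
    """One server's order, split into two steps so we can control the timing.

    Step 1: read the next ticket number.
    Step 2: bump the counter and print a ticket with the number read in step 1.
    """
    number = machine["next_ticket"]        # step 1: read
    yield                                  # ...another server might act right here
    machine["next_ticket"] = number + 1    # step 2: write back
    printed.append((server, number))


def run(schedule):
    """Run each server's steps in the given order, e.g. ["A", "B", "A", "B"]."""
    machine = {"next_ticket": 12}
    printed = []
    steps = {name: send_to_kitchen(name, machine, printed) for name in dict.fromkeys(schedule)}
    for name in schedule:
        next(steps[name], None)  # advance that server by one step
    return machine["next_ticket"], printed


if __name__ == "__main__":
    print(run(["A", "A", "B", "B"]))  # A finishes before B starts
    print(run(["A", "B", "A", "B"]))  # both read before either writes
```

In the polite order, `run(["A", "A", "B", "B"])` gives `(14, [('A', 12), ('B', 13)])`: two tickets, two numbers. In the unlucky order, `run(["A", "B", "A", "B"])` gives `(13, [('A', 12), ('B', 12)])`. Both orders claim ticket #12, and one ticket's worth of counting simply vanishes. Most of the time the polite order is what happens, which is exactly how a bug like this can lurk unnoticed. The usual fix is to make "read and bump" a single step that nothing can interrupt, for example with a lock.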

A latent race condition means the bug was there all along, hiding (possibly for years) until the timing finally went wrong. Which leads to the real question: How did nobody catch this?

As Computerworld pointed out, this wasn’t a quirky DNS mystery. It was the predictable outcome of laying off the senior people who would’ve noticed the issue, prevented it, or fixed it in minutes. You can’t eliminate your senior QA/testing/ops staff and expect the universe to reward you.

In our restaurant metaphor, when you remove the head chef, sous chef, and everyone who remembers where the knives are stored, you don’t get efficiency.

You get mayhem.

Why the restaurant was doomed to chaos before the rush even hit

1. Lack of resilience

This was a classic single-point-of-failure scenario. A huge percentage of global services run on AWS. If you bet everything on a single provider, a single region, or a single system, you’re not building resilience; you’re building that “Mouse Trap” board game contraption that went off if you looked at it wrong.
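Here's the back-of-the-napkin math for why "just use another restaurant" didn't help. The failure rates are invented for illustration: a 1% chance that any one provider is down, and a 1% chance for the shared piece. Treating the providers as independent is a big simplification. `outage_chance` is our own made-up name.

```python
def outage_chance(provider_failure: float, providers: int, shared_failure: float = 0.0) -> float:
    """Chance that you can't get served anywhere at a given moment.

    Assumes the providers fail independently of each other, except for
    one shared dependency (the common ticket machine) that takes all of
    them down together when it fails.
    """
    all_providers_down = provider_failure ** providers
    # You're fine only if the shared piece works AND at least one provider works.
    return 1 - (1 - shared_failure) * (1 - all_providers_down)


if __name__ == "__main__":
    print(f"{outage_chance(0.01, 1):.4%}")  # one provider
    print(f"{outage_chance(0.01, 2):.4%}")  # two truly independent providers
    print(f"{outage_chance(0.01, 2, shared_failure=0.01):.4%}")  # two, sharing one machine
```

With those made-up numbers, one provider leaves you stuck about 1% of the time. Two genuinely independent providers cut that to about 0.01%. But two providers that quietly share one ticket machine land right back at about 1.01%. The backup barely helps, because the shared piece is a single point of failure for both.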

2. Lack of deep skill

As we covered in When the Firewall Falls, when companies lay off their most experienced people, they also lay off:

institutional memory

engineering intuition

the ability to spot issues early

the ability to fix them fast

If you want a short, unnervingly accurate breakdown of how complex systems fail, read How Complex Systems Fail.

It’s basically “why the kitchen falls apart when the lunch rush hits,” but for infrastructure.

How to keep your own plate safe when the kitchen catches fire

This is why, in The Cyber Chain Reaction, we encouraged you to:

Back up your data in more than one place

Keep paper copies of documents that matter

Assume large systems are not resilient — and buffer your own life accordingly
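For the technically inclined, here's a small Python sketch of the first tip done carefully. It copies a file to more than one place and double-checks each copy with a SHA-256 fingerprint, so you know none of the copies is corrupted. The function names and folder names are our own placeholders. Point `destinations` at places that don't share a single point of failure (say, an external drive and a different cloud provider). Otherwise you've rebuilt the one-ticket-machine problem at home.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Fingerprint a file so we can tell whether two copies are identical."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source: Path, destinations: list[Path]) -> list[Path]:
    """Copy one file into several folders, checking that every copy matches."""
    original = sha256_of(source)
    copies = []
    for folder in destinations:
        folder.mkdir(parents=True, exist_ok=True)
        copy = Path(shutil.copy2(source, folder / source.name))
        if sha256_of(copy) != original:
            raise OSError(f"the copy in {folder} doesn't match the original")
        copies.append(copy)
    return copies


if __name__ == "__main__":
    # Demo in a throwaway folder. In real life the destinations would be
    # genuinely separate places, e.g. an external drive and a cloud-synced folder.
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        document = base / "important.pdf"
        document.write_bytes(b"pretend this is your tax return")
        for copy in back_up(document, [base / "external-drive", base / "cloud-folder"]):
            print("verified:", copy)
```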

You can’t stop AWS from splashing scalding coffee on the internet, but you can keep your own digital outfit from getting soaked.

Join us for tea!

CybersecuriTea is a free, plain-English guide to digital safety, designed for families, friends, and the folks you love. Subscribe today and get weekly tips to help keep your digital life secure.

Or, if you’d like to support our work and keep the kettle warm for everyone:

Issue #19

This content may contain affiliate links. If you choose to sign up or make a purchase through them, we may earn a small commission, at no additional cost to you. Thank you for supporting CybersecuriTea.